While browsing the list of accepted papers at ICML 2018, I came across the paper ‘Racing Thompson: an Efficient Algorithm for Thompson Sampling with Non-conjugate Priors’ by Zhou, Zhu, and Zhuo. Thompson sampling is a popular algorithm for exploration-exploitation tradeoff problems and is also known as Bayesian bandits. I decided to write my Sunday Reading Notes post on this paper because I have been interested in the exploration-exploitation tradeoff for a while and have explored the topic through Bayesian optimization and my WSDM’19 paper on sequential A/B testing.

Suppose we want to identify the best arm among a set of arms, and we have some prior knowledge about their rewards.

Thompson sampling (TS) balances exploring unexplored arms and collecting rewards from arms that already yield high rewards by choosing the $i$-th arm at time $t$ according to its posterior probability of being the optimal arm, $P_{it}$.
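To make the sampling rule concrete, here is a minimal sketch of Thompson sampling in the easy conjugate setting: Bernoulli rewards with a uniform Beta(1, 1) prior on each arm. This is not the algorithm from the paper; the function names, the three-arm example, and the reward means are all assumptions chosen for illustration. Drawing one sample from each posterior and picking the largest selects arm $i$ with exactly its posterior probability of being optimal.

```python
import numpy as np

def thompson_step(successes, failures, rng):
    """Pick an arm by drawing one sample from each Beta posterior."""
    # Beta(1 + s, 1 + f) is the posterior under a uniform Beta(1, 1) prior.
    theta = rng.beta(1 + successes, 1 + failures)
    # The argmax of the draws is arm i with probability P(arm i is optimal).
    return int(np.argmax(theta))

def run_bandit(true_means, n_rounds=2000, seed=0):
    """Simulate a Bernoulli bandit (hypothetical setup) and count pulls per arm."""
    rng = np.random.default_rng(seed)
    k = len(true_means)
    successes = np.zeros(k)
    failures = np.zeros(k)
    pulls = np.zeros(k, dtype=int)
    for _ in range(n_rounds):
        arm = thompson_step(successes, failures, rng)
        reward = float(rng.random() < true_means[arm])  # 1.0 or 0.0
        successes[arm] += reward
        failures[arm] += 1.0 - reward
        pulls[arm] += 1
    return pulls

pulls = run_bandit([0.2, 0.5, 0.8])
print(pulls)  # most pulls should concentrate on the last arm
```

With a conjugate prior the posterior update is just two counters per arm, which is why the non-conjugate case needs something more elaborate.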

The computational challenge is to compute the probabilities $P_{it}$. Because TS is often used as an online algorithm, efficient calculation of the posterior probabilities is very important. With a conjugate prior, the posterior is available in closed form and this calculation is cheap. With non-conjugate priors, I have seen people in the literature use Markov chain Monte Carlo (MCMC) and sequential Monte Carlo (SMC). The authors recognize this probability as an expectation and propose an importance sampling (IS) step combined with the Gumbel-Max trick, which transforms the sampling problem into an optimization problem, to sample an arm at time $t$ according to the probabilities $P_{it}$.
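For readers who have not met the Gumbel-Max trick, the sketch below shows the basic identity on its own, outside the paper's algorithm: adding independent standard Gumbel noise to log-probabilities and taking the argmax yields a draw from the corresponding categorical distribution. The function name and the three-outcome distribution are illustrative assumptions.

```python
import numpy as np

def gumbel_max_sample(log_weights, rng):
    """Draw one index i with probability proportional to exp(log_weights[i])."""
    log_weights = np.asarray(log_weights, dtype=float)
    # One independent standard Gumbel draw per category.
    gumbel_noise = rng.gumbel(size=log_weights.shape[0])
    # Sampling has become an optimization: return the maximizing index.
    return int(np.argmax(log_weights + gumbel_noise))

rng = np.random.default_rng(0)
probs = np.array([0.1, 0.3, 0.6])  # hypothetical target distribution
draws = [gumbel_max_sample(np.log(probs), rng) for _ in range(20000)]
freqs = np.bincount(draws, minlength=3) / len(draws)
print(freqs)  # empirical frequencies, close to probs
```

Because only the argmax matters, the log-weights do not need to be normalized, which is convenient when the probabilities are themselves only known up to a constant or through estimates.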

The benefit of this IS step comes from the flexibility to choose $B_t$ at each time step. The authors also leverage the stopping rule of racing algorithms to determine the number of IS samples needed to approximate the expectation.
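To spell out what "recognizing the probability as an expectation" means, here is the standard identity in my own notation, which is an assumption and may not match the paper's: $\pi_t(\theta)$ is the posterior over model parameters at time $t$, $\mu_j(\theta)$ is the expected reward of arm $j$ under $\theta$, and $q(\theta)$ is any proposal density that is positive wherever $\pi_t$ is.

$$
P_{it}
= \mathbb{E}_{\theta \sim \pi_t}\!\left[ \mathbf{1}\{\, i = \arg\max_j \mu_j(\theta) \,\} \right]
= \mathbb{E}_{\theta \sim q}\!\left[ \frac{\pi_t(\theta)}{q(\theta)} \, \mathbf{1}\{\, i = \arg\max_j \mu_j(\theta) \,\} \right]
\approx \frac{1}{N} \sum_{n=1}^{N} \frac{\pi_t(\theta_n)}{q(\theta_n)} \, \mathbf{1}\{\, i = \arg\max_j \mu_j(\theta_n) \,\},
\qquad \theta_n \sim q .
$$

The last step is the plain importance sampling estimate with $N$ proposal draws. The number of draws $N$ is the quantity a stopping rule would control.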

The resulting algorithm, which combines the benefits of importance sampling, the Gumbel-Max trick, and the racing algorithm, is proved to be PAC: it is asymptotically good in the sense that the total variation distance between the true value and its estimate converges to zero.

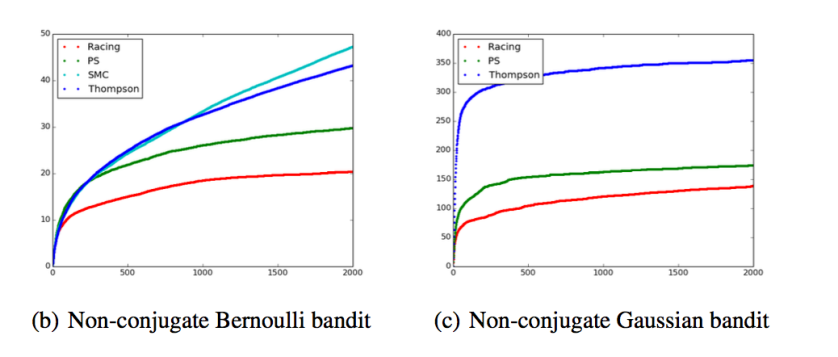

What I find very interesting in the regret analysis section is that the racing TS in this paper can achieve much lower regret than Thompson sampling and prior swapping (PS), even though it uses far fewer particles than SMC and PS. It was not intuitive to me why this should happen. Upon a little further investigation, I found that the priors used for TS and for PS and Racing are different in both plots: for ease of implementation, the authors chose a conjugate prior for TS. This leaves me wondering what the results would be if MCMC or SMC with more particles were used as the baseline for the regret analysis.

References:

- Zhou, Y., Zhu, J., & Zhuo, J. (2018). Racing Thompson: an Efficient Algorithm for Thompson Sampling with Non-conjugate Priors. Proceedings of the 35th International Conference on Machine Learning, PMLR 80:6000-6008.